Nvidia Cuda Installation Guide For Mac

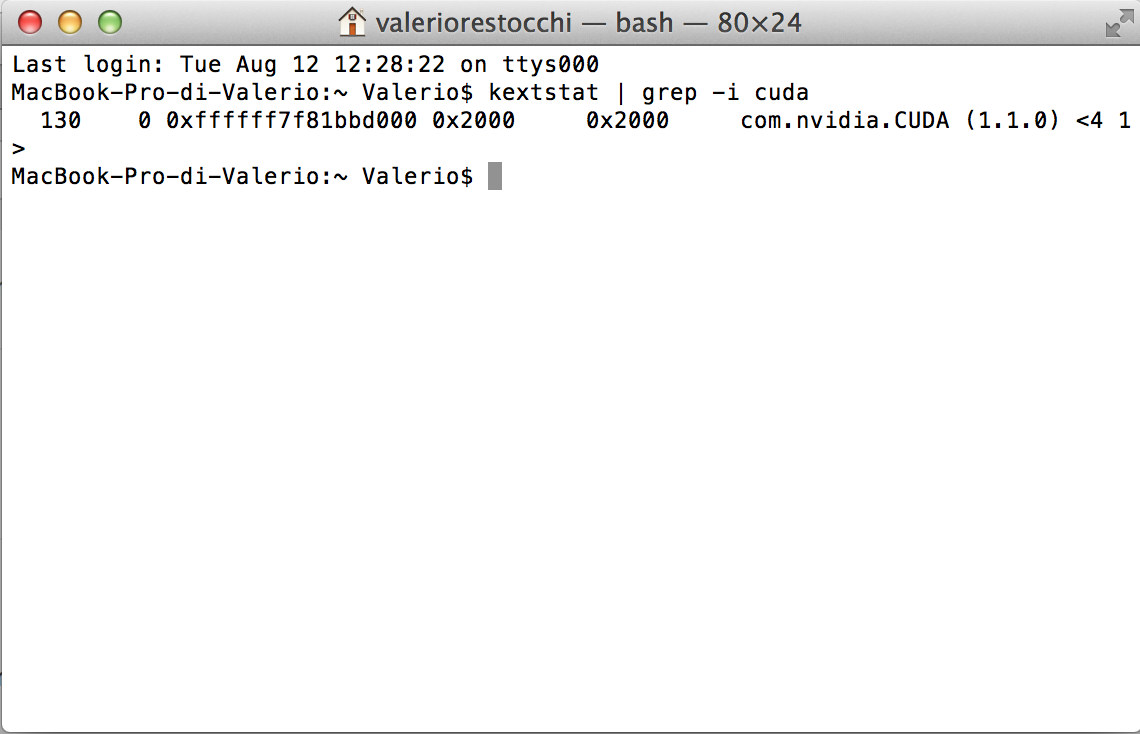

* Take note of the encircled 'NVIDIA CUDA Driver for MAC', as this is how I got the latest driver in Step 4 below.
* Restart.

Step 4: Download the latest CUDA version.

March 2018 quicklink: NVIDIA DRIVERS Quadro & GeForce macOS Driver Release 378.10.10.10.20.109

And again, for folks in the future: please look at the screenshot above.

CUDA has been supported since LLVM 3.9. The current release of clang (7.0.0) supports CUDA 7.0 through 9.2. If you need support for CUDA 10, you will need to use clang built from r342924 or newer.

Before you build CUDA code, you’ll need to have installed the appropriate driver for your NVIDIA GPU and the CUDA SDK. See NVIDIA’s CUDA installation guide for details.

Note that clang does not support the CUDA toolkit as installed by many Linux package managers; you probably need to install CUDA in a single directory from NVIDIA’s package.

CUDA compilation is supported on Linux. Compilation on macOS and Windows may or may not work, and currently has no maintainers.

Compilation with CUDA-9.x is currently broken on Windows.

To build and run, use the following commands, filling in the parts in angle brackets as described below:

    $ clang++ axpy.cu -o axpy --cuda-gpu-arch=<GPU arch> \
        -L<CUDA install path>/<lib64 or lib> \
        -lcudart_static -ldl -lrt -pthread
    $ ./axpy
    y[0] = 2
    y[1] = 4
    y[2] = 6
    y[3] = 8

On macOS, replace `-lcudart_static` with `-lcudart`; otherwise, you may get “CUDA driver version is insufficient for CUDA runtime version” errors when you run your program.

* `<CUDA install path>`: the directory where you installed the CUDA SDK. Typically, /usr/local/cuda. Pass e.g. `-L/usr/local/cuda/lib64` if compiling in 64-bit mode; otherwise, pass e.g. `-L/usr/local/cuda/lib`. (In CUDA, the device code and host code always have the same pointer widths, so if you’re compiling 64-bit code for the host, you’re also compiling 64-bit code for the device.) Note that as of v10.0 the CUDA SDK no longer supports compilation of 32-bit applications.
* `<GPU arch>`: the compute capability of your GPU. For example, if you want to run your program on a GPU with compute capability of 3.5, specify `--cuda-gpu-arch=sm_35`.
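The build command above expects a source file named axpy.cu, which this guide does not reproduce. A minimal sketch of such a program follows, assuming a single block of four threads. The helper names `ok` and `run_axpy` are made up for illustration and are not part of the CUDA API.

```cuda
// axpy.cu: compute y[i] = a * x[i] on the GPU for a tiny vector.
#include <cstdio>
#include <cuda_runtime.h>

// One thread per element; the launch below uses a single block.
__global__ void axpy(float a, const float* x, float* y, int n) {
  int i = threadIdx.x;
  if (i < n) y[i] = a * x[i];
}

// Hypothetical helper: report a failed CUDA runtime call and return false.
static bool ok(cudaError_t err, const char* what) {
  if (err == cudaSuccess) return true;
  std::fprintf(stderr, "%s failed: %s\n", what, cudaGetErrorString(err));
  return false;
}

// Hypothetical wrapper: copy x to the device, run the kernel, copy y back.
bool run_axpy(float a, const float* host_x, float* host_y, int n) {
  const size_t bytes = n * sizeof(float);
  float* device_x = NULL;
  float* device_y = NULL;
  bool good =
      ok(cudaMalloc(&device_x, bytes), "cudaMalloc(x)") &&
      ok(cudaMalloc(&device_y, bytes), "cudaMalloc(y)") &&
      ok(cudaMemcpy(device_x, host_x, bytes, cudaMemcpyHostToDevice),
         "copy to device");
  if (good) {
    axpy<<<1, n>>>(a, device_x, device_y, n);
    good = ok(cudaGetLastError(), "kernel launch") &&
           ok(cudaDeviceSynchronize(), "kernel execution") &&
           ok(cudaMemcpy(host_y, device_y, bytes, cudaMemcpyDeviceToHost),
              "copy to host");
  }
  cudaFree(device_x);  // cudaFree on a null pointer performs no operation
  cudaFree(device_y);
  return good;
}

int main() {
  const int kDataLen = 4;
  const float host_x[kDataLen] = {1.0f, 2.0f, 3.0f, 4.0f};
  float host_y[kDataLen] = {0.0f, 0.0f, 0.0f, 0.0f};
  if (!run_axpy(2.0f, host_x, host_y, kDataLen)) return 1;
  for (int i = 0; i < kDataLen; ++i) {
    std::printf("y[%d] = %g\n", i, host_y[i]);
  }
  return 0;
}
```

With a working driver and a GPU arch that matches the build flags, this should print the values 2, 4, 6 and 8. If the runtime and driver are mismatched, the `ok` helper prints the runtime's error string, which is where a message such as “CUDA driver version is insufficient for CUDA runtime version” would surface.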

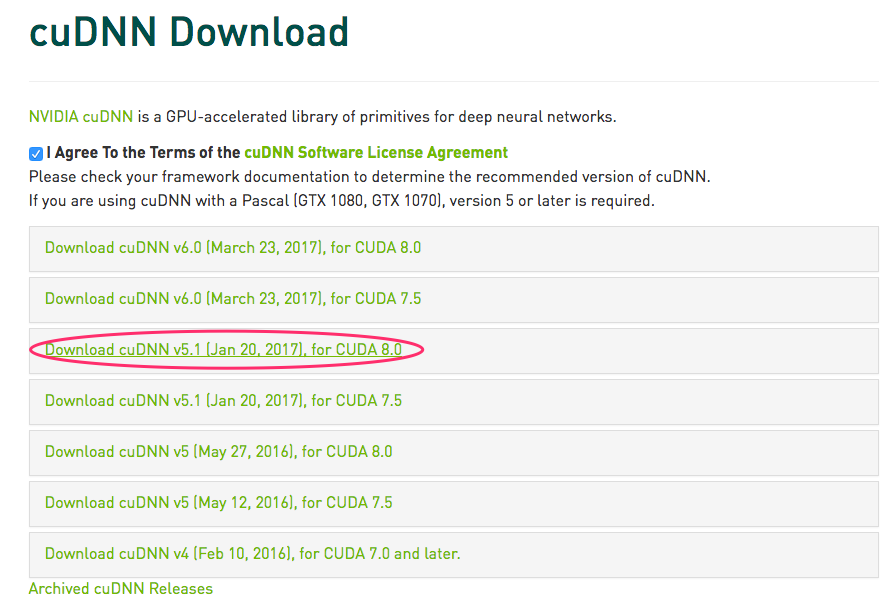

Nvidia Cuda Download

Note: You cannot pass `compute_XX` as an argument to `--cuda-gpu-arch`; only `sm_XX` is currently supported. However, clang always includes PTX in its binaries, so a binary compiled with `--cuda-gpu-arch=sm_30` would be forwards-compatible with newer GPUs.

You can pass `--cuda-gpu-arch` multiple times to compile for multiple archs.

The `-L` and `-l` flags only need to be passed when linking. When compiling, you may also need to pass `--cuda-path=/path/to/cuda` if you didn’t install the CUDA SDK into /usr/local/cuda or /usr/local/cuda-X.Y.

nvcc does not officially support std::complex.

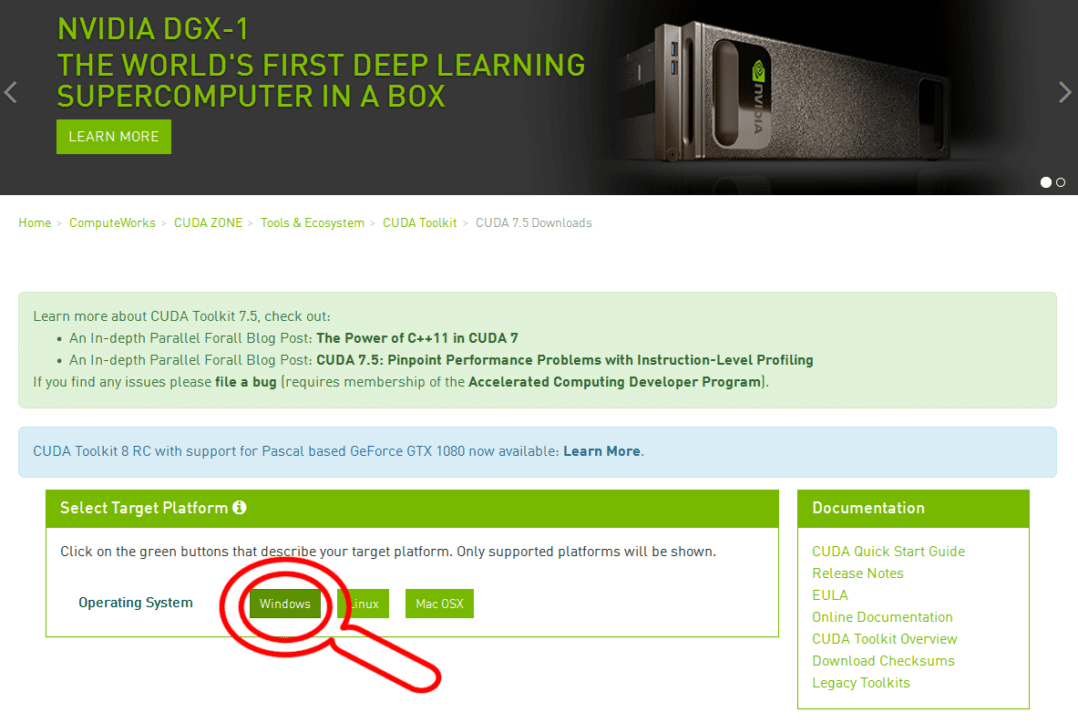

Install Cuda Mac

It’s an error to use std::complex in `__device__` code, but it often works in `__host__ __device__` code due to nvcc’s interpretation of the “wrong-side rule” (see below). However, we have heard from implementers that it’s possible to get into situations where nvcc will omit a call to an std::complex function, especially when compiling without optimizations.

As of 2016-11-16, clang supports std::complex without these caveats. It is tested with libstdc++ 4.8.5 and newer, but is known to work only with libc++ newer than 2016-11-16.

Although clang’s CUDA implementation is largely compatible with NVCC’s, you may still want to detect when you’re compiling CUDA code specifically with clang.
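One way to make that check is with preprocessor conditionals. The sketch below assumes that clang defines `__CUDA__` only when it is itself compiling CUDA code, and that `__CUDA_ARCH__` is defined only during device compilation. The `ClangCudaMode` enum and the `clang_cuda_mode` function are hypothetical names used for illustration.

```cuda
// detect.cu: report whether clang's CUDA mode compiled this host code.
#include <cstdio>

// Hypothetical labels for the three cases we distinguish.
enum ClangCudaMode { kNotClangCuda = 0, kClangCudaHost = 1, kClangCudaDevice = 2 };

#if defined(__clang__) && defined(__CUDA__) && !defined(__CUDA_ARCH__)
// clang compiling CUDA code, host mode.
#define CLANG_CUDA_MODE kClangCudaHost
#elif defined(__clang__) && defined(__CUDA__) && defined(__CUDA_ARCH__)
// clang compiling CUDA code, device mode.
#define CLANG_CUDA_MODE kClangCudaDevice
#else
// Any other compiler or mode, including nvcc using clang as a subtool.
#define CLANG_CUDA_MODE kNotClangCuda
#endif

// An unattributed function is host code, so the value it returns at run
// time is the one selected while compiling for the host.
ClangCudaMode clang_cuda_mode() { return CLANG_CUDA_MODE; }

int main() {
  static const char* const kNames[] = {
      "not clang CUDA", "clang CUDA, host mode", "clang CUDA, device mode"};
  std::printf("%s\n", kNames[clang_cuda_mode()]);
  return 0;
}
```

Built as CUDA with clang, the host-side call is expected to report host mode. Built with nvcc, it should report that clang's CUDA mode is not active, even when clang is the host compiler.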

Detecting clang is tricky, because NVCC may invoke clang as part of its own compilation process! For example, NVCC uses the host compiler’s preprocessor when compiling for device code, and that host compiler may in fact be clang.

When clang is actually compiling CUDA code, rather than being used as a subtool of NVCC’s, it defines the `__CUDA__` macro. `__CUDA_ARCH__` is defined only in device mode (but will be defined if NVCC is using clang as a preprocessor). So you can detect clang CUDA compilation by checking that both `__clang__` and `__CUDA__` are defined, and then use `__CUDA_ARCH__` to tell host mode from device mode.

nvcc uses split compilation, which works roughly as follows:

* Run a preprocessor over the input .cu file to split it into two source files: H, containing source code for the host, and D, containing source code for the device.
* For each GPU architecture arch that we’re compiling for:
  * Compile D using nvcc proper. The result of this is a PTX file, P_arch.
  * Optionally, invoke ptxas, the PTX assembler, to generate a file, S_arch, containing GPU machine code (SASS) for arch.
* Invoke fatbin to combine all P_arch and S_arch files into a single “fat binary” file, F.
* Compile H using an external host compiler (gcc, clang, or whatever you like). F is packaged up into a header file which is force-included into H; nvcc generates code that calls into this header to e.g. launch kernels.

clang uses merged parsing.

This is similar to split compilation, except all of the host and device code is present and must be semantically correct in both compilation steps.

* For each GPU architecture arch that we’re compiling for:
  * Compile the input .cu file for device, using clang. `__host__` code is parsed and must be semantically correct, even though we’re not generating code for the host at this time. The output of this step is a PTX file, P_arch.
  * Invoke ptxas to generate a SASS file, S_arch. Note that, unlike nvcc, clang always generates SASS code.
* Invoke fatbin to combine all P_arch and S_arch files into a single fat binary file, F.
* Compile H using clang. `__device__` code is parsed and must be semantically correct, even though we’re not generating code for the device at this time. F is passed to this compilation, and clang includes it in a special ELF section, where it can be found by tools like cuobjdump.

(You may ask at this point, why does clang need to parse the input file multiple times? Why not parse it just once, and then use the AST to generate code for the host and each device architecture?

Unfortunately this can’t work because we have to define different macros during host compilation and during device compilation for each GPU architecture.)

clang’s approach allows it to be highly robust to C++ edge cases, as it doesn’t need to decide at an early stage which declarations to keep and which to throw away. But it has some consequences you should be aware of.

One consequence concerns overload resolution. In the rules that follow, H, D, and HD stand for `__host__` functions, `__device__` functions, and `__host__ __device__` functions respectively.

* D functions prefer to call other Ds. HDs are given lower priority.
* Similarly, H functions prefer to call other Hs, or `__global__` functions (with equal priority). HDs are given lower priority.
* HD functions prefer to call other HDs. When compiling for device, HDs will call Ds with lower priority than HD, and will call Hs with still lower priority. If an HD function is forced to call an H, the program is malformed if we emit code for this HD function. We call this the “wrong-side rule”.
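The preference order can be observed with a pair of same-signature overloads. The following is a sketch for clang only: it assumes clang accepts a `__host__` and a `__device__` function with identical signatures, which nvcc is expected to reject. The names `side`, `side_seen_from_hd`, `probe` and `side_seen_from_device` are hypothetical.

```cuda
// overload.cu: clang-only sketch of host/device overload preference.
#include <cstdio>
#include <cuda_runtime.h>

// H and D overloads with the same signature.
__host__ int side() { return 0; }    // H
__device__ int side() { return 1; }  // D

// HD caller. There is no HD overload of side(), so when compiling for
// device it falls back to the D overload, and when compiling for host it
// falls back to the H overload. Without the D overload, the device pass
// would be forced to call an H, which is the wrong-side case.
__host__ __device__ int side_seen_from_hd() { return side(); }

// Run the HD function on the GPU and store what it returned.
__global__ void probe(int* out) { *out = side_seen_from_hd(); }

// Hypothetical wrapper: returns the device-side result, or -1 on error.
int side_seen_from_device() {
  int* device_out = NULL;
  int result = -1;
  if (cudaMalloc(&device_out, sizeof(int)) != cudaSuccess) return -1;
  probe<<<1, 1>>>(device_out);
  // cudaMemcpy waits for the kernel on the default stream to finish.
  if (cudaMemcpy(&result, device_out, sizeof(int),
                 cudaMemcpyDeviceToHost) != cudaSuccess) {
    result = -1;
  }
  cudaFree(device_out);
  return result;
}

int main() {
  std::printf("host pass: %d, device pass: %d\n",
              side_seen_from_hd(), side_seen_from_device());
  return 0;
}
```

Under the rules listed above, the host call should return 0 and the kernel should store 1, because the same HD function binds to a different overload in each compilation pass.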

The rules are symmetrical when compiling for host.

Modern CPUs and GPUs are architecturally quite different, so code that’s fast on a CPU isn’t necessarily fast on a GPU.

Cuda For Mac

We’ve made a number of changes to LLVM to make it generate good GPU code. Among these changes are:

* Straight-line scalar optimizations: These reduce redundancy within straight-line code.
* Aggressive speculative execution: This is mainly for promoting straight-line scalar optimizations, which are most effective on code along dominator paths.
* Memory space inference: In PTX, we can operate on pointers that are in a particular “address space” (global, shared, constant, or local), or we can operate on pointers in the “generic” address space, which can point to anything. Operations in a non-generic address space are faster, but pointers in CUDA are not explicitly annotated with their address space, so it’s up to LLVM to infer it where possible.
* Bypassing 64-bit divides: This was an existing optimization that we enabled for the PTX backend. 64-bit integer divides are much slower than 32-bit ones on NVIDIA GPUs. Many of the 64-bit divides in our benchmarks have a divisor and dividend which fit in 32 bits at runtime. This optimization provides a fast path for this common case.
* Aggressive loop unrolling and function inlining: Loop unrolling and function inlining need to be more aggressive for GPUs than for CPUs because control flow transfer on a GPU is more expensive. More aggressive unrolling and inlining also promote other optimizations, such as constant propagation and SROA, which sometimes speed up code by over 10x. (Programmers can force unrolling and inlining using clang’s loop unrolling pragmas and `__attribute__((always_inline))`.)
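Forcing unrolling and inlining by hand might look like the sketch below. It assumes `#pragma unroll` as the loop unrolling pragma and a fixed number of elements per thread. The names `kPerThread`, `scale`, `scale_all` and `scale_on_gpu` are hypothetical.

```cuda
// unroll_inline.cu: forcing loop unrolling and inlining in a kernel.
#include <cstdio>
#include <cuda_runtime.h>

enum { kPerThread = 4 };  // elements handled by each thread

// always_inline asks the compiler to inline this helper at every call site.
__device__ __attribute__((always_inline)) inline float scale(float a, float v) {
  return a * v;
}

__global__ void scale_all(float a, const float* x, float* y) {
  const int base = kPerThread * threadIdx.x;
  // The trip count is a compile-time constant, so the loop can be fully
  // unrolled into straight-line code.
#pragma unroll
  for (int k = 0; k < kPerThread; ++k) {
    y[base + k] = scale(a, x[base + k]);
  }
}

// Hypothetical wrapper: processes threads * kPerThread elements on the GPU.
bool scale_on_gpu(float a, const float* host_x, float* host_y, int threads) {
  const size_t bytes = threads * kPerThread * sizeof(float);
  float* device_x = NULL;
  float* device_y = NULL;
  bool good = cudaMalloc(&device_x, bytes) == cudaSuccess &&
              cudaMalloc(&device_y, bytes) == cudaSuccess &&
              cudaMemcpy(device_x, host_x, bytes,
                         cudaMemcpyHostToDevice) == cudaSuccess;
  if (good) {
    scale_all<<<1, threads>>>(a, device_x, device_y);
    good = cudaMemcpy(host_y, device_y, bytes,
                      cudaMemcpyDeviceToHost) == cudaSuccess;
  }
  cudaFree(device_x);
  cudaFree(device_y);
  return good;
}

int main() {
  const int kThreads = 2;
  float host_x[kThreads * kPerThread];
  float host_y[kThreads * kPerThread];
  for (int i = 0; i < kThreads * kPerThread; ++i) host_x[i] = float(i);
  if (!scale_on_gpu(3.0f, host_x, host_y, kThreads)) return 1;
  for (int i = 0; i < kThreads * kPerThread; ++i) {
    std::printf("y[%d] = %g\n", i, host_y[i]);
  }
  return 0;
}
```

Whether the forced unrolling and inlining actually help depends on the kernel, so it is worth comparing against a build without the pragma and attribute before keeping them.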